Python Cookbook

Tags (space-separated): Python

Data Structures and Algorithms

Iteration is fundamental to data processing. And when scanning datasets that don’t fit in memory, we need a way to fetch the items lazily, that is, one at a time and on demand. This is what the Iterator pattern is about.

The yield keyword allows the construction of generators, which work as iterators.

Every generator is an iterator: generators fully implement the iterator interface. But an iterator, as defined in the GoF book, retrieves items from a collection, while a generator can produce items without any underlying collection.

Every collection in Python is iterable, and iterators are used internally to support:

- for loops

- Construction and extension of collection types

- Looping over text files line by line

- List, dict, and set comprehensions

- Tuple unpacking

- Unpacking actual parameters with * in function calls

Sentence Take #1: A Sequence of Words

Example 14-1. sentence.py: A Sentence as a sequence of words.

```python
import re
import reprlib

RE_WORD = re.compile(r'\w+')


class Sentence:

    def __init__(self, text):
        self.text = text
        self.words = RE_WORD.findall(text)

    def __getitem__(self, index):
        return self.words[index]

    def __len__(self):
        return len(self.words)

    def __repr__(self):
        # reprlib.repr is a utility function to generate abbreviated string
        # representations of data structures that can be very large.
        return 'Sentence(%s)' % reprlib.repr(self.text)
```

Example 14-2. Testing iteration on a Sentence instance

```python
>>> s = Sentence('"The time has come," the Walrus said,')
>>> s
Sentence('"The time ha... Walrus said,')
>>> for word in s:
...     print(word)
...
The
time
has
come
the
Walrus
said
>>> list(s)
['The', 'time', 'has', 'come', 'the', 'Walrus', 'said']
>>> s[0]
'The'
>>> s[5]
'Walrus'
>>> s[-1]
'said'
```

Why Sequences Are Iterable: The iter Function

Whenever the interpreter needs to iterate over an object x, it automatically calls iter(x).

The iter built-in function:

- Checks whether the object implements __iter__, and calls that to obtain an iterator.

- If __iter__ is not implemented, but __getitem__ is implemented, Python creates an iterator that attempts to fetch items in order, starting from index 0 (zero).

- If that fails, Python raises TypeError, usually saying “C object is not iterable,” where C is the class of the target object.

That is why any Python sequence is iterable: they all implement __getitem__. In fact, the standard sequences also implement __iter__, and yours should too, because the special handling of __getitem__ exists for backward compatibility reasons and may be gone in the future.

As far as the abc.Iterable ABC is concerned, an object is considered iterable if its class implements the __iter__ method. No subclassing or registration is required.

```python
>>> class Foo:
...     def __iter__(self):
...         pass
...
>>> from collections import abc
>>> issubclass(Foo, abc.Iterable)
True
>>> f = Foo()
>>> isinstance(f, abc.Iterable)
True
```

As of Python 3.4, the most accurate way to check whether an object x is iterable is to call iter(x) and handle a TypeError exception if it isn’t. This is more accurate than using isinstance(x, abc.Iterable), because iter(x) also considers the legacy __getitem__ method, while the Iterable ABC does not.
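To make that difference concrete, here is a small sketch. The class `OnlyGetitem` and the helper `is_iterable` are hypothetical names used only for illustration; the point is that `iter()` accepts an object that only has `__getitem__`, while the `Iterable` ABC check rejects it.

```python
from collections import abc


class OnlyGetitem:
    """Hypothetical sequence-like class: implements __getitem__ but not __iter__."""

    def __getitem__(self, index):
        if index >= 3:
            raise IndexError(index)  # tells the legacy iteration protocol to stop
        return index * 10


def is_iterable(obj):
    """Return True if iter(obj) succeeds, i.e. the object can be iterated over."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


obj = OnlyGetitem()
print(isinstance(obj, abc.Iterable))  # False: the ABC only looks for __iter__
print(is_iterable(obj))               # True: iter() falls back to __getitem__
print(list(obj))                      # [0, 10, 20]
print(is_iterable(42))                # False: int supports neither protocol
```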

Iterables Versus Iterators

It’s important to be clear about the relationship between iterables and iterators: Python obtains iterators from iterables.

Here is a simple for loop iterating over a str. The str 'ABC' is the iterable here.

```python
>>> s = 'ABC'
>>> for char in s:
...     print(char)
...
A
B
C
```

If there were no for statement and we had to emulate the for machinery by hand with a while loop, this is what we would have to write:

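```python
>>> s = 'ABC'
>>> it = iter(s)  # build an iterator from the iterable
>>> while True:
...     try:
...         print(next(it))  # repeatedly call next on the iterator
...     except StopIteration:
...         del it  # release the reference: the iterator object is discarded
...         break   # exit the loop
...
A
B
C
```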

StopIteration signals that the iterator is exhausted. This exception is handled internally in for loops and other iteration contexts like list comprehensions, tuple unpacking, etc.

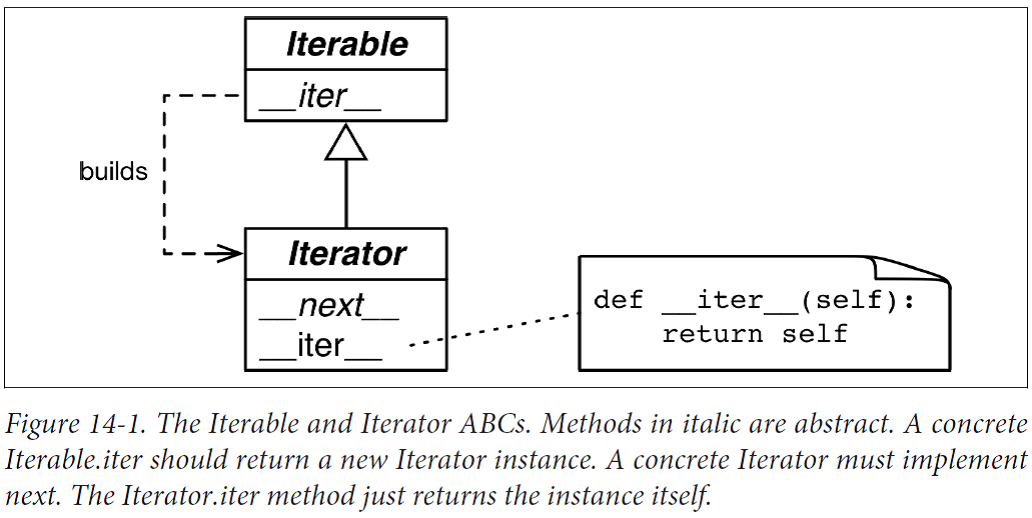

The standard interface for an iterator has two methods:

- __next__: Returns the next available item, raising StopIteration when there are no more items.

- __iter__: Returns self; this allows iterators to be used where an iterable is expected, for example, in a for loop.

Example 14-3. abc.Iterator class; extracted from Lib/_collections_abc.py

```python
class Iterator(Iterable):

    __slots__ = ()

    @abstractmethod
    def __next__(self):
        'Return the next item from the iterator. When exhausted, raise StopIteration'
        raise StopIteration

    def __iter__(self):
        return self

    @classmethod
    def __subclasshook__(cls, C):
        if cls is Iterator:
            if (any("__next__" in B.__dict__ for B in C.__mro__) and
                    any("__iter__" in B.__dict__ for B in C.__mro__)):
                return True
        return NotImplemented
```

The best way to check if an object x is an iterator is to call isinstance(x, abc.Iterator). Thanks to Iterator.__subclasshook__, this test works even if the class of x is not a real or virtual subclass of Iterator.
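A quick sketch of that structural check, using a hypothetical `Countdown` class that neither inherits from nor registers with `abc.Iterator`:

```python
from collections import abc


class Countdown:
    """Hypothetical iterator: implements __next__ and __iter__, no ABC subclassing."""

    def __init__(self, start):
        self.current = start

    def __next__(self):
        if self.current <= 0:
            raise StopIteration
        self.current -= 1
        return self.current + 1

    def __iter__(self):
        return self


cd = Countdown(3)
print(isinstance(cd, abc.Iterator))         # True, thanks to Iterator.__subclasshook__
print(issubclass(Countdown, abc.Iterator))  # True
print(isinstance([1, 2], abc.Iterator))     # False: a list is iterable, not an iterator
print(list(cd))                             # [3, 2, 1]
```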

Using the Sentence class from Example 14-1, the Python console shows clearly how the iterator is built by iter(…) and consumed by next(…).

```python
>>> s3 = Sentence('Pig and Pepper')
>>> it = iter(s3)
>>> it
<iterator object at 0x...>
>>> next(it)
'Pig'
>>> next(it)
'and'
>>> next(it)
'Pepper'
>>> next(it)
Traceback (most recent call last):
  ...
StopIteration
>>> list(it)
[]
>>> list(iter(s3))
['Pig', 'and', 'Pepper']
```

Because the only methods required of an iterator are __next__ and __iter__, there is no way to check whether there are remaining items, other than to call next() and catch StopIteration. Also, it’s not possible to “reset” an iterator. If you need to start over, you need to call iter(…) on the iterable that built the iterator in the first place.

- iterator: Any object that implements the __next__ no-argument method that returns the next item in a series or raises StopIteration when there are no more items. Python iterators also implement the __iter__ method so they are iterable as well.

Sentence Take #2: A Classic Iterator

The next Sentence class is built according to the classic Iterator design pattern following the blueprint in the GoF book.

Example 14-4. sentence_iter.py: Sentence implemented using the Iterator pattern.

```python
import re
import reprlib

RE_WORD = re.compile(r'\w+')


class Sentence:

    def __init__(self, text):
        self.text = text
        self.words = RE_WORD.findall(text)

    def __repr__(self):
        return 'Sentence(%s)' % reprlib.repr(self.text)

    def __iter__(self):
        # __iter__ fulfills the iterable protocol by instantiating and returning an iterator.
        return SentenceIterator(self.words)


class SentenceIterator:

    def __init__(self, words):
        self.words = words
        self.index = 0

    def __next__(self):
        try:
            word = self.words[self.index]
        except IndexError:
            raise StopIteration()  # no more words: signal exhaustion
        self.index += 1
        return word

    def __iter__(self):
        return self  # iterators return themselves
```

Note that implementing __iter__ in SentenceIterator is not actually needed for this example to work, but it’s the right thing to do: iterators are supposed to implement both __next__ and __iter__, and doing so makes our iterator pass the issubclass(SentenceIterator, abc.Iterator) test.

Making Sentence an Iterator: Bad Idea

A common cause of errors in building iterables and iterators is to confuse the two. To be clear: iterables have an __iter__ method that instantiates a new iterator every time. Iterators implement a __next__ method that returns individual items, and an __iter__ method that returns self.

Therefore, iterators are also iterable, but iterables are not iterators.

It may be tempting to implement __next__ in addition to __iter__ in the Sentence class, making each Sentence instance at the same time an iterable and iterator over itself. But this is a terrible idea.

A proper implementation of the pattern requires each call to iter(my_iterable) to create a new, independent iterator. That is why we need the SentenceIterator class in this example.

An iterable should never act as an iterator over itself. In other words, iterables must implement __iter__, but not __next__. On the other hand, for convenience, iterators should be iterable. An iterator’s __iter__ should just return self.
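The following sketch shows what goes wrong when an iterable is its own iterator. `SelfIteratingSentence` is a hypothetical anti-pattern class written for this illustration, not one of the examples above: because `__iter__` returns `self`, every loop shares a single cursor.

```python
class SelfIteratingSentence:
    """Anti-pattern sketch (hypothetical class): an iterable that is its own iterator."""

    def __init__(self, words):
        self.words = words
        self.index = 0

    def __iter__(self):
        return self  # no new iterator: every caller shares one cursor

    def __next__(self):
        try:
            word = self.words[self.index]
        except IndexError:
            raise StopIteration
        self.index += 1
        return word


s = SelfIteratingSentence(['Pig', 'and', 'Pepper'])
print(list(s))  # ['Pig', 'and', 'Pepper']
print(list(s))  # []: the single shared cursor is already exhausted

# Two "independent" loops over the same object interfere with each other:
s2 = SelfIteratingSentence(['a', 'b', 'c', 'd'])
pairs = [(x, y) for x in s2 for y in s2]
print(pairs)  # [('a', 'b'), ('a', 'c'), ('a', 'd')]
```

With the SentenceIterator design, each loop calls iter(…) and gets a fresh iterator, so the nested comprehension would produce all 16 pairs.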

Sentence Take #3: A Generator Function

A Pythonic implementation of the same functionality uses a generator function to replace the SentenceIterator class.

Example 14-5. sentence_gen.py: Sentence implemented using a generator function.

```python
import re
import reprlib

RE_WORD = re.compile(r'\w+')


class Sentence:

    def __init__(self, text):
        self.text = text
        self.words = RE_WORD.findall(text)

    def __repr__(self):
        return 'Sentence(%s)' % reprlib.repr(self.text)

    def __iter__(self):
        for word in self.words:
            yield word
        return  # this return is not needed: the generator ends when the body ends
```

How a Generator Function Works

Any Python function that has the yield keyword in its body is a generator function.

Here is the simplest function useful to demonstrate the behavior of a generator:

```python
>>> def gen_123():
...     yield 1
...     yield 2
...     yield 3
...
>>> gen_123
<function gen_123 at 0x...>
>>> gen_123()
<generator object gen_123 at 0x...>
>>> for i in gen_123():
...     print(i)
...
1
2
3
>>> g = gen_123()
>>> next(g)
1
>>> next(g)
2
>>> next(g)
3
>>> next(g)
Traceback (most recent call last):
  ...
StopIteration
```

When we invoke next(…) on the generator object, execution advances to the next yield in the function body, and the next(…) call evaluates to the value yielded when the function body is suspended.
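The suspended state can be observed directly with `inspect.getgeneratorstate` from the standard library. A minimal sketch, repeating the `gen_123` function so the block runs on its own:

```python
import inspect


def gen_123():
    yield 1
    yield 2
    yield 3


g = gen_123()
print(inspect.getgeneratorstate(g))  # GEN_CREATED: the body has not started yet
print(next(g))                       # 1
print(inspect.getgeneratorstate(g))  # GEN_SUSPENDED: paused at the first yield
print(list(g))                       # [2, 3]
print(inspect.getgeneratorstate(g))  # GEN_CLOSED: the body ran to the end
```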

Example 14-6. A generator function that prints messages when it runs.

```python
>>> def gen_AB():
...     print('start')
...     yield 'A'
...     print('continue')
...     yield 'B'
...     print('end.')
...
>>> for c in gen_AB():
...     print('-->', c)
...
start
--> A
continue
--> B
end.
```

In Example 14-5, __iter__ is a generator function which, when called, builds a generator object that implements the iterator interface, so the SentenceIterator class is no longer needed.

This version of Sentence is much shorter than Take #2, but it’s not as lazy as it could be. Nowadays, laziness is considered a good trait, at least in programming languages and APIs. A lazy implementation postpones producing values to the last possible moment. This saves memory and may avoid useless processing as well.

Sentence Take #4: A Lazy Implementation

The Iterator interface is designed to be lazy: next(my_iterator) produces one item at a time.

Our Sentence implementations so far have not been lazy because the __init__ eagerly builds a list of all words in the text, binding it to the self.words attribute. This will entail processing the entire text, and the list may use as much memory as the text itself.

The re.finditer function is a lazy version of re.findall: instead of a list, it returns an iterator that produces match objects on demand.
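A minimal sketch of that laziness, assuming the same `RE_WORD` pattern used in these examples: `finditer` hands back an iterator, and each `next()` call produces one match object.

```python
import re

RE_WORD = re.compile(r'\w+')

text = 'The time has come'
matches = RE_WORD.finditer(text)     # an iterator; matches are found as items are requested
first = next(matches)                # one match object
print(first.group(), first.span())   # The (0, 3)
print([m.group() for m in matches])  # ['time', 'has', 'come']: the remaining matches
```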

Example 14-7. sentence_gen2.py: Sentence implemented using a generator function calling the re.finditer generator function.

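```python
import re
import reprlib

RE_WORD = re.compile(r'\w+')


class Sentence:

    def __init__(self, text):
        self.text = text  # no words list: the text is scanned lazily in __iter__

    def __repr__(self):
        return 'Sentence(%s)' % reprlib.repr(self.text)

    def __iter__(self):
        # finditer produces one match at a time; group() extracts the matched text
        for match in RE_WORD.finditer(self.text):
            yield match.group()
```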

Generator functions are an awesome shortcut, but the code can be made even shorter with a generator expression.

Sentence Take #5: A Generator Expression

A generator expression can be understood as a lazy version of a list comprehension. In other words, if a list comprehension is a factory of lists, a generator expression is a factory of generators.

Example 14-8. The gen_AB generator function is used by a list comprehension, then by a generator expression.

```python
>>> def gen_AB():
...     print('start')
...     yield 'A'
...     print('continue')
...     yield 'B'
...     print('end.')
...
>>> res1 = [x*3 for x in gen_AB()]
start
continue
end.
>>> for i in res1:
...     print('-->', i)
...
--> AAA
--> BBB
>>> res2 = (x*3 for x in gen_AB())
>>> res2
<generator object <genexpr> at 0x10063c240>
>>> for i in res2:
...     print('-->', i)
...
start
--> AAA
continue
--> BBB
end.
```

Example 14-9. sentence_genexp.py: Sentence implemented using a generator expression.

```python
import re
import reprlib

RE_WORD = re.compile(r'\w+')


class Sentence:

    def __init__(self, text):
        self.text = text

    def __repr__(self):
        return 'Sentence(%s)' % reprlib.repr(self.text)

    def __iter__(self):
        # the generator expression builds and returns a generator object
        return (match.group() for match in RE_WORD.finditer(self.text))
```

Generator Expressions: When to Use Them

In Example 14-9, we saw that a generator expression is a syntactic shortcut to create a generator without defining and calling a function. On the other hand, generator functions are much more flexible: you can code complex logic with multiple statements, and you can even use them as coroutines.
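As a sketch of that trade-off, the two hypothetical functions below produce the same words. The extra rule (keep words longer than three characters, lowercased) is made up for the illustration; it is the kind of logic that stays readable in a generator function but gets crowded in a one-line generator expression.

```python
import re

RE_WORD = re.compile(r'\w+')


def words_gen(text):
    """Generator function: room for several statements."""
    for match in RE_WORD.finditer(text):
        word = match.group()
        if len(word) > 3:  # hypothetical extra rule, easy to extend here
            yield word.lower()


def words_genexp(text):
    """Same result with a generator expression; fine while the logic stays short."""
    return (m.group().lower() for m in RE_WORD.finditer(text) if len(m.group()) > 3)


text = '"The time has come," the Walrus said,'
print(list(words_gen(text)))     # ['time', 'come', 'walrus', 'said']
print(list(words_genexp(text)))  # ['time', 'come', 'walrus', 'said']
```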

Another Example: Arithmetic Progression Generator

Example 14-11. The ArithmeticProgression class.

```python
class ArithmeticProgression:

    def __init__(self, begin, step, end=None):
        self.begin = begin
        self.step = step
        self.end = end  # None -> "infinite" series

    def __iter__(self):
        # coerce begin to the type of begin + step (e.g. int 0 + Fraction -> Fraction)
        result = type(self.begin + self.step)(self.begin)
        forever = self.end is None
        index = 0
        while forever or result < self.end:
            yield result
            index += 1
            # compute each term from begin to reduce accumulated floating point errors
            result = self.begin + self.step * index
```

Example 14-10. Demonstration of an ArithmeticProgression class.

```python
>>> ap = ArithmeticProgression(0, 1, 3)
>>> list(ap)
[0, 1, 2]
>>> ap = ArithmeticProgression(1, .5, 3)
>>> list(ap)
[1.0, 1.5, 2.0, 2.5]
>>> ap = ArithmeticProgression(0, 1/3, 1)
>>> list(ap)
[0.0, 0.3333333333333333, 0.6666666666666666]
>>> from fractions import Fraction
>>> ap = ArithmeticProgression(0, Fraction(1, 3), 1)
>>> list(ap)
[Fraction(0, 1), Fraction(1, 3), Fraction(2, 3)]
>>> from decimal import Decimal
>>> ap = ArithmeticProgression(0, Decimal('.1'), .3)
>>> list(ap)
[Decimal('0'), Decimal('0.1'), Decimal('0.2')]
```

Example 14-12 shows a generator function called aritprog_gen that does the same job as ArithmeticProgression but with less code.

Example 14-12. The aritprog_gen generator function

```python
def aritprog_gen(begin, step, end=None):
    result = type(begin + step)(begin)
    forever = end is None
    index = 0
    while forever or result < end:
        yield result
        index += 1
        result = begin + step * index
```

There are plenty of ready-to-use generators in the standard library, and the next section will show an even cooler implementation using the itertools module.

Arithmetic Progression with itertools

The itertools module in Python 3.4 has 19 generator functions that can be combined in a variety of interesting ways.

For example, the itertools.count function returns a generator that produces numbers.

```python
>>> import itertools
>>> gen = itertools.count(1, .5)
>>> next(gen)
1
>>> next(gen)
1.5
>>> next(gen)
2.0
>>> next(gen)
2.5
```

However, itertools.count never stops, so if you call list(count()), Python will try to build a list larger than available memory and your machine will be very grumpy long before the call fails.

On the other hand, there is the itertools.takewhile function: it produces a generator that consumes another generator and stops when a given predicate evaluates to False.

```python
>>> gen = itertools.takewhile(lambda n: n < 3, itertools.count(1, .5))
>>> list(gen)
[1, 1.5, 2.0, 2.5]
```

Example 14-13. aritprog_v3.py: this works like the previous aritprog_gen functions.

```python
import itertools


def aritprog_gen(begin, step, end=None):
    first = type(begin + step)(begin)
    ap_gen = itertools.count(first, step)  # infinite series
    if end is not None:
        # stop as soon as a term is no longer less than end
        ap_gen = itertools.takewhile(lambda n: n < end, ap_gen)
    return ap_gen
```
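Because the function in Example 14-13 returns an infinite iterator when end is None, it has to be bounded before it is materialized. A minimal sketch, repeating the function so the block runs on its own:

```python
import itertools


def aritprog_gen(begin, step, end=None):
    # Same logic as Example 14-13 (aritprog_v3.py).
    first = type(begin + step)(begin)
    ap_gen = itertools.count(first, step)
    if end is not None:
        ap_gen = itertools.takewhile(lambda n: n < end, ap_gen)
    return ap_gen


# With end=None the series never stops, so take a bounded slice before calling list().
print(list(itertools.islice(aritprog_gen(0, 2), 5)))  # [0, 2, 4, 6, 8]
print(list(aritprog_gen(1, .5, 3)))                    # [1.0, 1.5, 2.0, 2.5]
```

One design difference worth noting: itertools.count advances by repeated addition, while Example 14-12 computes begin + step * index each time. The itertools documentation points out that for floating point steps the multiplicative form can be more accurate, so the two versions may disagree in the last digits for a step such as 1/3.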

When implementing generators, know what is available in the standard library, otherwise there’s a good chance you’ll reinvent the wheel.

Generator Functions in the Standard Library

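The os.walk generator function is impressive: while traversing a directory tree it yields a (dirpath, dirnames, filenames) tuple for each directory, so walking a recursive directory structure is as easy as writing a for loop.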
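A minimal sketch of os.walk used as a generator. It builds a throwaway directory tree with tempfile; the file names a.txt and sub/b.txt are made up for the example.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    # Build a tiny, hypothetical tree: root/a.txt and root/sub/b.txt
    os.makedirs(os.path.join(root, 'sub'))
    for rel in ('a.txt', os.path.join('sub', 'b.txt')):
        with open(os.path.join(root, rel), 'w') as fp:
            fp.write('x')

    walker = os.walk(root)  # a generator object: directories are scanned as we iterate
    found = []
    for dirpath, dirnames, filenames in walker:
        for name in filenames:
            found.append(os.path.relpath(os.path.join(dirpath, name), root))

print(sorted(found))  # ['a.txt', 'sub/b.txt'] on POSIX; Windows uses a backslash
```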

This section summarizes two dozen generator functions from the built-in, itertools, and functools modules.

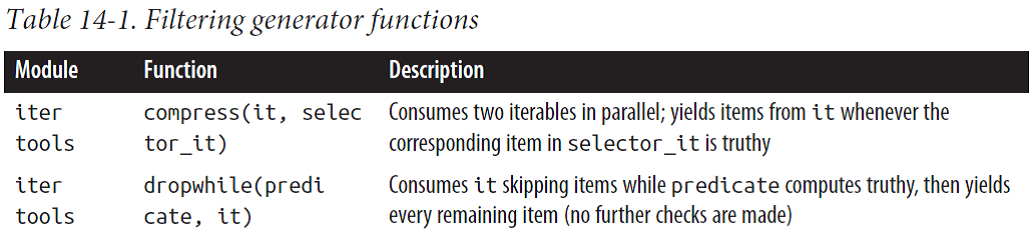

The first group are filtering generator functions: they yield a subset of items produced by the input iterable, without changing the items themselves.

Example 14-14. Filtering generator functions examples.

```python
>>> def vowel(c):
...     return c.lower() in 'aeiou'
...
>>> list(filter(vowel, 'Aardvark'))
['A', 'a', 'a']
>>> import itertools
>>> list(itertools.filterfalse(vowel, 'Aardvark'))
['r', 'd', 'v', 'r', 'k']
>>> list(itertools.dropwhile(vowel, 'Aardvark'))
['r', 'd', 'v', 'a', 'r', 'k']
>>> list(itertools.takewhile(vowel, 'Aardvark'))
['A', 'a']
>>> list(itertools.compress('Aardvark', (1, 0, 1, 1, 0, 1)))
['A', 'r', 'd', 'a']
>>> list(itertools.islice('Aardvark', 4))
['A', 'a', 'r', 'd']
>>> list(itertools.islice('Aardvark', 4, 7))
['v', 'a', 'r']
>>> list(itertools.islice('Aardvark', 1, 7, 2))
['a', 'd', 'a']
```

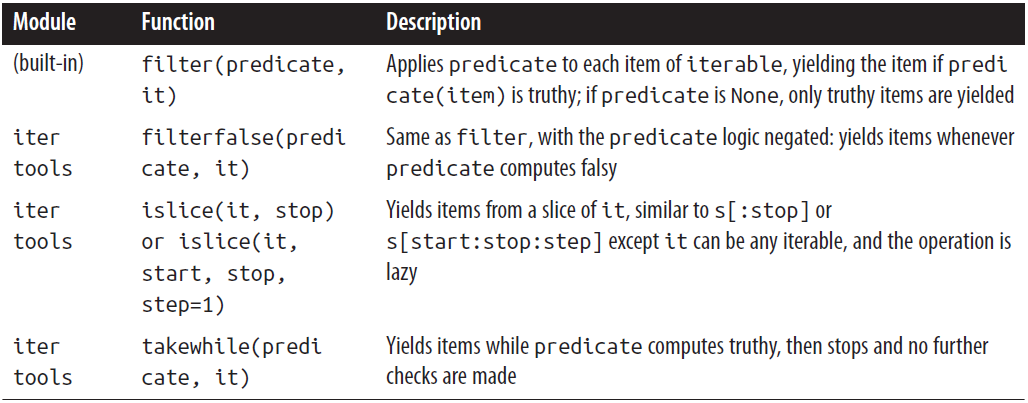

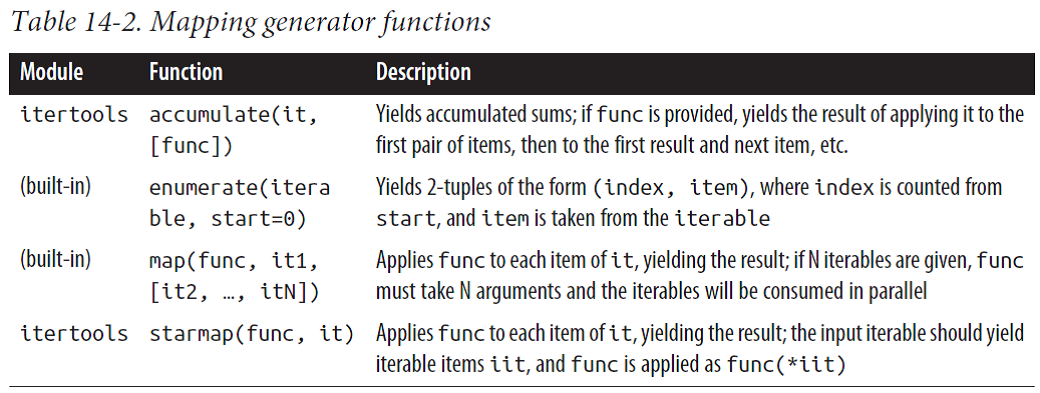

The next group are the mapping generators: they yield items computed from each individual item in the input iterable—or iterables, in the case of map and starmap.

Example 14-15. itertools.accumulate generator function examples.

```python
>>> sample = [5, 4, 2, 8, 7, 6, 3, 0, 9, 1]
>>> import itertools
>>> list(itertools.accumulate(sample))
[5, 9, 11, 19, 26, 32, 35, 35, 44, 45]
>>> list(itertools.accumulate(sample, min))
[5, 4, 2, 2, 2, 2, 2, 0, 0, 0]
>>> list(itertools.accumulate(sample, max))
[5, 5, 5, 8, 8, 8, 8, 8, 9, 9]
>>> import operator
>>> list(itertools.accumulate(sample, operator.mul))
[5, 20, 40, 320, 2240, 13440, 40320, 0, 0, 0]
>>> list(itertools.accumulate(range(1, 11), operator.mul))
[1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800]
```

Example 14-16. Mapping generator function examples.

```python
>>> list(enumerate('albatroz', 1))
[(1, 'a'), (2, 'l'), (3, 'b'), (4, 'a'), (5, 't'), (6, 'r'), (7, 'o'), (8, 'z')]
>>> import operator
>>> list(map(operator.mul, range(11), range(11)))
[0, 1, 4, 9, 16, 25, 36, 49, 64, 81, 100]
>>> list(map(operator.mul, range(11), [2, 4, 8]))
[0, 4, 16]
>>> list(map(lambda a, b: (a, b), range(11), [2, 4, 8]))
[(0, 2), (1, 4), (2, 8)]
>>> import itertools
>>> list(itertools.starmap(operator.mul, enumerate('albatroz', 1)))
['a', 'll', 'bbb', 'aaaa', 'ttttt', 'rrrrrr', 'ooooooo', 'zzzzzzzz']
>>> sample = [5, 4, 2, 8, 7, 6, 3, 0, 9, 1]
>>> list(itertools.starmap(lambda a, b: b/a,
...     enumerate(itertools.accumulate(sample), 1)))
[5.0, 4.5, 3.6666666666666665, 4.75, 5.2, 5.333333333333333, 5.0, 4.375, 4.888888888888889, 4.5]
```

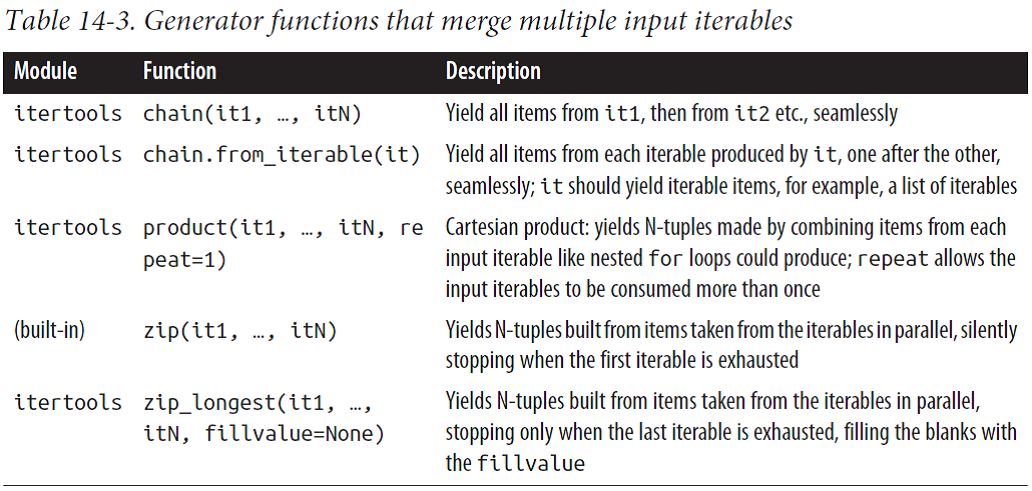

Next, we have the group of merging generators—all of these yield items from multiple input iterables. chain and chain.from_iterable consume the input iterables sequentially (one after the other), while product, zip, and zip_longest consume the input iterables in parallel.

Example 14-17. Merging generator function examples.

```python
>>> list(itertools.chain('ABC', range(2)))
['A', 'B', 'C', 0, 1]
>>> list(itertools.chain(enumerate('ABC')))
[(0, 'A'), (1, 'B'), (2, 'C')]
>>> list(itertools.chain.from_iterable(enumerate('ABC')))
[0, 'A', 1, 'B', 2, 'C']
>>> list(zip('ABC', range(5)))
[('A', 0), ('B', 1), ('C', 2)]
>>> list(zip('ABC', range(5), [10, 20, 30, 40]))
[('A', 0, 10), ('B', 1, 20), ('C', 2, 30)]
>>> list(itertools.zip_longest('ABC', range(5)))
[('A', 0), ('B', 1), ('C', 2), (None, 3), (None, 4)]
>>> list(itertools.zip_longest('ABC', range(5), fillvalue='?'))
[('A', 0), ('B', 1), ('C', 2), ('?', 3), ('?', 4)]
```

Example 14-18. itertools.product generator function examples.

```python
>>> list(itertools.product('ABC', range(2)))
[('A', 0), ('A', 1), ('B', 0), ('B', 1), ('C', 0), ('C', 1)]
>>> suits = 'spades hearts diamonds clubs'.split()
>>> list(itertools.product('AK', suits))
[('A', 'spades'), ('A', 'hearts'), ('A', 'diamonds'), ('A', 'clubs'), ('K', 'spades'), ('K', 'hearts'), ('K', 'diamonds'), ('K', 'clubs')]
>>> list(itertools.product('ABC'))
[('A',), ('B',), ('C',)]
>>> list(itertools.product('ABC', repeat=2))
[('A', 'A'), ('A', 'B'), ('A', 'C'), ('B', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'A'), ('C', 'B'), ('C', 'C')]
>>> list(itertools.product(range(2), repeat=3))
[(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1), (1, 0, 0), (1, 0, 1), (1, 1, 0), (1, 1, 1)]
>>> rows = itertools.product('AB', range(2), repeat=2)
>>> for row in rows: print(row)
...
('A', 0, 'A', 0)
('A', 0, 'A', 1)
('A', 0, 'B', 0)
('A', 0, 'B', 1)
('A', 1, 'A', 0)
('A', 1, 'A', 1)
('A', 1, 'B', 0)
('A', 1, 'B', 1)
('B', 0, 'A', 0)
('B', 0, 'A', 1)
('B', 0, 'B', 0)
('B', 0, 'B', 1)
('B', 1, 'A', 0)
('B', 1, 'A', 1)
('B', 1, 'B', 0)
('B', 1, 'B', 1)
```

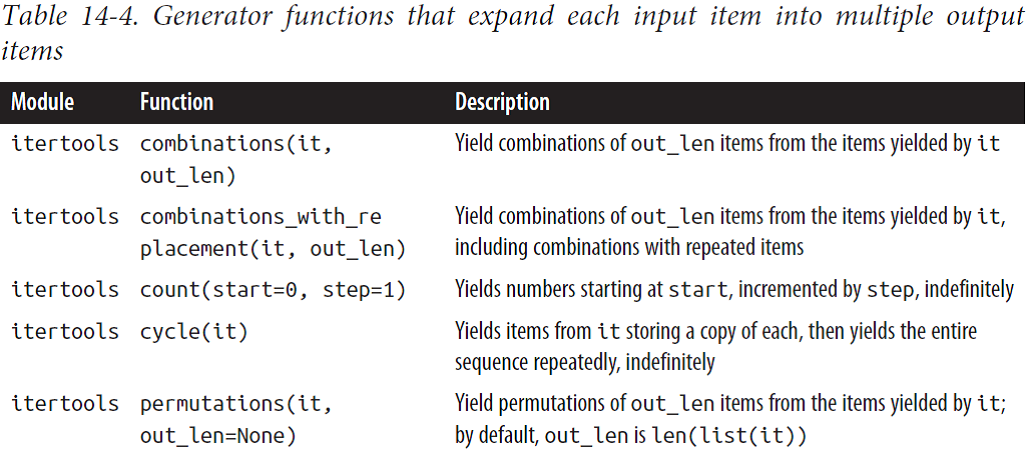

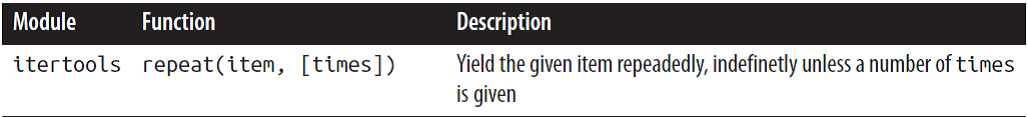

Some generator functions expand the input by yielding more than one value per input item.

Example 14-19. count, cycle, and repeat.

```python
>>> ct = itertools.count()
>>> next(ct)
0
>>> next(ct), next(ct), next(ct)
(1, 2, 3)
>>> list(itertools.islice(itertools.count(1, .3), 3))
[1, 1.3, 1.6]
>>> cy = itertools.cycle('ABC')
>>> next(cy)
'A'
>>> list(itertools.islice(cy, 7))
['B', 'C', 'A', 'B', 'C', 'A', 'B']
>>> rp = itertools.repeat(7)
>>> next(rp), next(rp)
(7, 7)
>>> list(itertools.repeat(8, 4))
[8, 8, 8, 8]
>>> list(map(operator.mul, range(11), itertools.repeat(5)))
[0, 5, 10, 15, 20, 25, 30, 35, 40, 45, 50]
```

Example 14-20. Combinatoric generator functions yield multiple values per input item.

```python
>>> list(itertools.combinations('ABC', 2))
[('A', 'B'), ('A', 'C'), ('B', 'C')]
>>> list(itertools.combinations_with_replacement('ABC', 2))
[('A', 'A'), ('A', 'B'), ('A', 'C'), ('B', 'B'), ('B', 'C'), ('C', 'C')]
>>> list(itertools.permutations('ABC', 2))
[('A', 'B'), ('A', 'C'), ('B', 'A'), ('B', 'C'), ('C', 'A'), ('C', 'B')]
>>> list(itertools.product('ABC', repeat=2))
[('A', 'A'), ('A', 'B'), ('A', 'C'), ('B', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'A'), ('C', 'B'), ('C', 'C')]
```

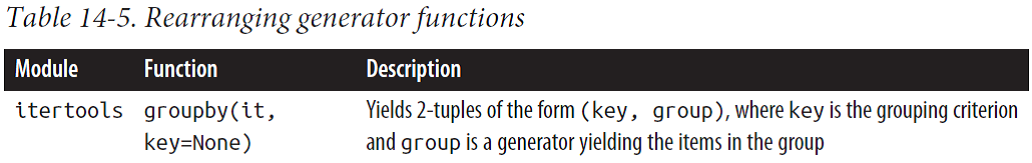

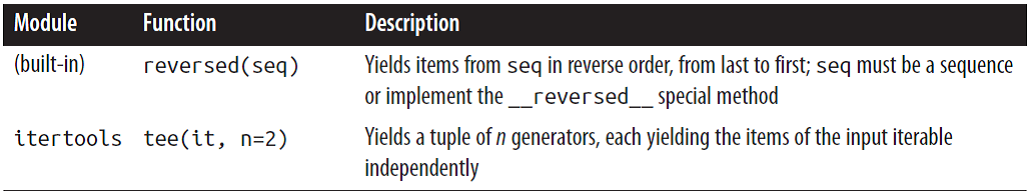

The last group of generator functions we’ll cover in this section are designed to yield all items in the input iterables, but rearranged in some way.

Example 14-21. itertools.groupby.

```python
>>> list(itertools.groupby('LLLLAAGGG'))
[('L', <itertools._grouper object at 0x102227cc0>),
 ('A', <itertools._grouper object at 0x102227b38>),
 ('G', <itertools._grouper object at 0x102227b70>)]
>>> for char, group in itertools.groupby('LLLLAAGGG'):
...     print(char, '->', list(group))
...
L -> ['L', 'L', 'L', 'L']
A -> ['A', 'A']
G -> ['G', 'G', 'G']
>>> animals = ['duck', 'eagle', 'rat', 'giraffe', 'bear',
...            'bat', 'dolphin', 'shark', 'lion']
>>> animals.sort(key=len)
>>> animals
['rat', 'bat', 'duck', 'bear', 'lion', 'eagle', 'shark', 'giraffe', 'dolphin']
>>> for length, group in itertools.groupby(animals, len):
...     print(length, '->', list(group))
...
3 -> ['rat', 'bat']
4 -> ['duck', 'bear', 'lion']
5 -> ['eagle', 'shark']
7 -> ['giraffe', 'dolphin']
>>> for length, group in itertools.groupby(reversed(animals), len):
...     print(length, '->', list(group))
...
7 -> ['dolphin', 'giraffe']
5 -> ['shark', 'eagle']
4 -> ['lion', 'bear', 'duck']
3 -> ['bat', 'rat']
```
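One caveat the examples above depend on: groupby only merges consecutive items with the same key, which is why animals was sorted by len first. A small sketch with the unsorted list:

```python
import itertools

animals = ['duck', 'eagle', 'rat', 'giraffe', 'bear',
           'bat', 'dolphin', 'shark', 'lion']

# Unsorted input: the same key shows up again whenever it is interrupted.
unsorted_groups = [(k, list(g)) for k, g in itertools.groupby(animals, len)]
print(unsorted_groups[:3])  # [(4, ['duck']), (5, ['eagle']), (3, ['rat'])]
print(len(unsorted_groups))  # 9: one group per item here

# Sorting by the same key first gives exactly one group per key.
sorted_groups = [(k, list(g)) for k, g in itertools.groupby(sorted(animals, key=len), len)]
print([k for k, _ in sorted_groups])  # [3, 4, 5, 7]
```

Each group is turned into a list immediately inside the comprehension; a group is only valid until groupby advances to the next key.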

Example 14-22. itertools.tee yields multiple generators, each yielding every item of the input generator.

```python
>>> list(itertools.tee('ABC'))
[<itertools._tee object at 0x10222abc8>, <itertools._tee object at 0x10222ac08>]
>>> g1, g2 = itertools.tee('ABC')
>>> next(g1)
'A'
>>> next(g2)
'A'
>>> next(g2)
'B'
>>> list(g1)
['B', 'C']
>>> list(g2)
['C']
>>> list(zip(*itertools.tee('ABC')))
[('A', 'A'), ('B', 'B'), ('C', 'C')]
```

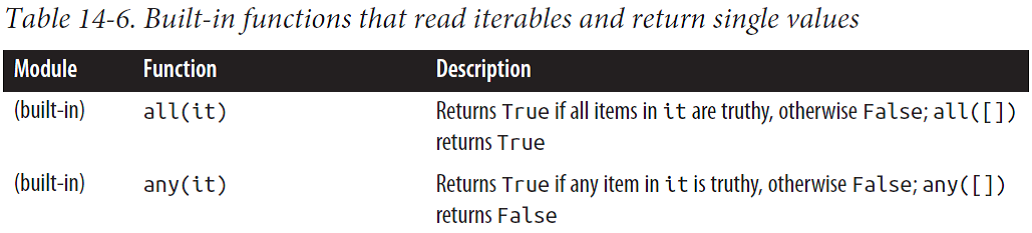

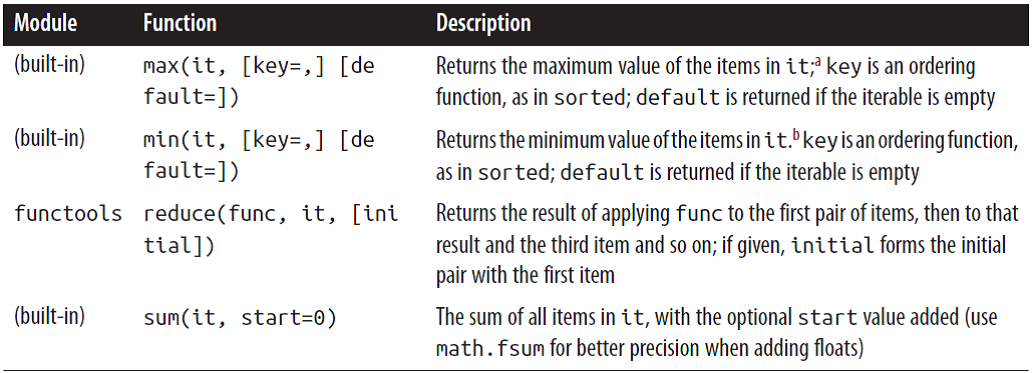

Iterable Reducing Functions

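The functions in Table 14-6 all take an iterable and return a single result. Every one of the built-ins listed here could be implemented with functools.reduce, but they exist as built-ins because they address some common use cases more easily. In addition, all and any short-circuit: they stop consuming the iterable as soon as the result is determined, which reduce cannot do.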
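A sketch of how all, any, and sum could be expressed with functools.reduce. The names my_all, my_any, and my_sum are hypothetical; unlike the built-ins, these folds always consume the entire iterable.

```python
import functools
import operator


def my_all(iterable):
    # Fold with logical "and", starting from True (so an empty input gives True).
    return functools.reduce(lambda acc, x: acc and bool(x), iterable, True)


def my_any(iterable):
    # Fold with logical "or", starting from False (so an empty input gives False).
    return functools.reduce(lambda acc, x: acc or bool(x), iterable, False)


def my_sum(iterable):
    return functools.reduce(operator.add, iterable, 0)


print(my_all([1, 2, 3]), my_all([1, 0, 3]), my_all([]))  # True False True
print(my_any([0, 0.0]), my_sum(range(5)))                # False 10

g = (n for n in [0, 0.0, 7, 8])
print(my_any(g))   # True
leftover = list(g)
print(leftover)    # []: unlike any(), reduce consumed the whole generator
```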

Example 14-23. Results of all and any for some sequences.

```python
>>> all([1, 2, 3])
True
>>> all([1, 0, 3])
False
>>> all([])
True
>>> any([1, 2, 3])
True
>>> any([1, 0, 3])
True
>>> any([0, 0.0])
False
>>> any([])
False
>>> g = (n for n in [0, 0.0, 7, 8])
>>> any(g)
True
>>> next(g)
8
```

A Closer Look at the iter Function

The iter function can also be called with two arguments to create an iterator from a regular function or any callable object. In this usage, the first argument must be a callable to be invoked repeatedly (with no arguments) to produce values, and the second argument is a sentinel: a marker value which, when returned by the callable, causes the iterator to raise StopIteration instead of yielding the sentinel.

The following example shows how to use iter to roll a six-sided die until a 1 is rolled:

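```python
>>> from random import randint
>>> def d6():
...     return randint(1, 6)
...
>>> d6_iter = iter(d6, 1)
>>> d6_iter
<callable_iterator object at 0x00000000029BE6A0>
>>> for roll in d6_iter:  # rolls are random, so this output varies from run to run
...     print(roll)
...
4
3
6
3
```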

A useful example is found in the iter built-in function documentation. This snippet reads lines from a file until readline returns an empty string, which only happens at the end of the file (a blank line comes back as '\n', so it does not stop the loop):

```python
with open('mydata.txt') as fp:
    for line in iter(fp.readline, ''):
        process_line(line)  # process_line stands for whatever per-line work you need
```
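To see where the sentinel-based loop actually stops, here is a small sketch that uses io.StringIO as an in-memory stand-in for mydata.txt (the file contents are made up):

```python
import io

fp = io.StringIO('first\n\nthird\n')  # in-memory stand-in for a text file

# Sentinel '': readline() returns '' only at end of file, so the blank line is kept.
all_lines = list(iter(fp.readline, ''))
print(all_lines)    # ['first\n', '\n', 'third\n']

fp.seek(0)
# Sentinel '\n': stop at the first blank line instead. Caution: if the file had no
# blank line, this would never stop, because readline() keeps returning '' at EOF.
up_to_blank = list(iter(fp.readline, '\n'))
print(up_to_blank)  # ['first\n']
```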

Context Managers and else Blocks

Context Managers and with Blocks

Context manager objects exist to control a with statement, just like iterators exist to control a for statement.

The with statement was designed to simplify the try/finally pattern, which guarantees that some operation is performed after a block of code, even if the block is aborted because of an exception, a return or sys.exit() call. The code in the finally clause usually releases a critical resource or restores some previous state that was temporarily changed.

The most common example is making sure a file object is closed.

Example 15-1. Demonstration of a file object as a context manager.

```python
>>> with open('mirror.py') as fp:
...     src = fp.read(60)
...
>>> len(src)
60
>>> fp
<_io.TextIOWrapper name='mirror.py' mode='r' encoding='UTF-8'>
>>> fp.closed, fp.encoding
(True, 'UTF-8')
>>> fp.read(60)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ValueError: I/O operation on closed file.
```
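The relationship between with and try/finally described above can be sketched with a hypothetical Managed class that implements the context manager protocol (__enter__ and __exit__) and records each step in a list:

```python
class Managed:
    """Hypothetical context manager that records when it is entered and exited."""

    def __init__(self, log):
        self.log = log

    def __enter__(self):
        self.log.append('enter')
        return self  # the value bound by "as" in a with statement

    def __exit__(self, exc_type, exc_value, traceback):
        self.log.append('exit')  # runs even if the block raised, like a finally clause
        return False             # falsy: do not suppress the exception


log = []
try:
    with Managed(log):
        log.append('body')
        raise ValueError('boom')
except ValueError:
    log.append('handled')
print(log)   # ['enter', 'body', 'exit', 'handled']

# Simplified hand-written equivalent; a real with statement also passes the
# exception details to __exit__ instead of None, None, None.
log2 = []
mgr = Managed(log2)
mgr.__enter__()
try:
    log2.append('body')
finally:
    mgr.__exit__(None, None, None)
print(log2)  # ['enter', 'body', 'exit']
```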

Concurrency with Futures

This chapter focuses on the concurrent.futures library introduced in Python 3.2.

Example: Web Downloads in Three Styles

To handle network I/O efficiently, you need concurrency: network I/O involves high latency, so instead of wasting CPU cycles waiting, it’s better to do something else until a response comes back from the network.

I wrote three simple programs to download images of 20 country flags from the Web.

The first one, flags.py, runs sequentially: it only requests the next image when the previous one is downloaded and saved to disk. The other two scripts make concurrent downloads: they request all images practically at the same time, and save the files as they arrive. The flags_threadpool.py script uses the concurrent.futures package, while flags_asyncio.py uses asyncio.

A Sequential Download Script

Example 17-2. flags.py: sequential download script; some functions will be reused by the other scripts.

```python
import os
import time
import sys

import requests

POP20_CC = ('CN IN US ID BR PK NG BD RU JP '
            'MX PH VN ET EG DE IR TR CD FR').split()

BASE_URL = 'http://flupy.org/data/flags'

DEST_DIR = 'downloads/'


def save_flag(img, filename):
    path = os.path.join(DEST_DIR, filename)
    with open(path, 'wb') as fp:
        fp.write(img)


def get_flag(cc):
    url = '{}/{cc}/{cc}.gif'.format(BASE_URL, cc=cc.lower())
    resp = requests.get(url)
    return resp.content


def show(text):
    print(text, end=' ')
    sys.stdout.flush()  # show progress immediately, without waiting for a newline


def download_many(cc_list):
    # sequential: each download must finish before the next one starts
    for cc in sorted(cc_list):
        image = get_flag(cc)
        show(cc)
        save_flag(image, cc.lower() + '.gif')
    return len(cc_list)


def main(download_many):
    os.makedirs(DEST_DIR, exist_ok=True)  # make sure the destination directory exists
    t0 = time.time()
    count = download_many(POP20_CC)
    elapsed = time.time() - t0
    msg = '\n{} flags downloaded in {:.2f}s'
    print(msg.format(count, elapsed))


if __name__ == '__main__':
    main(download_many)
```

The requests library by Kenneth Reitz is available on PyPI and is more powerful and easier to use than the urllib.request module from the Python 3 standard library.

Downloading with concurrent.futures

The main features of the concurrent.futures package are the ThreadPoolExecutor and ProcessPoolExecutor classes, which implement an interface that allows you to submit callables for execution in different threads or processes, respectively.
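A minimal sketch of the executor interface on its own, before the full script. fake_download is a hypothetical stand-in that sleeps instead of fetching a flag, so the example needs no network access; submit and futures.as_completed are part of concurrent.futures.

```python
from concurrent import futures
import time


def fake_download(cc):
    """Hypothetical stand-in for get_flag: sleeps instead of doing network I/O."""
    time.sleep(0.1)
    return cc.lower() + '.gif'


cc_list = ['BR', 'CN', 'IN']
with futures.ThreadPoolExecutor(max_workers=3) as executor:
    # submit schedules the callable and immediately returns a Future
    to_do = [executor.submit(fake_download, cc) for cc in cc_list]
    # as_completed yields futures as they finish, not in submission order
    results = [future.result() for future in futures.as_completed(to_do)]

print(sorted(results))  # ['br.gif', 'cn.gif', 'in.gif']
```

Because the three sleeps overlap in separate threads, the whole block takes roughly the time of one call rather than three; executor.map, used in the script below, instead returns results in the order of its input.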

Example 17-3. flags_threadpool.py: threaded download script using futures.ThreadPoolExecutor.

```python
from concurrent import futures

from flags import save_flag, get_flag, show, main

MAX_WORKERS = 20


def download_one(cc):
    image = get_flag(cc)
    show(cc)
    save_flag(image, cc.lower() + '.gif')
    return cc


def download_many(cc_list):
    # Set the number of worker threads: use the smaller number between the maximum
    # we want to allow (MAX_WORKERS) and the actual items to be processed, so no
    # unnecessary threads are created.
    workers = min(MAX_WORKERS, len(cc_list))
    # Instantiate the ThreadPoolExecutor with that number of worker threads; the
    # executor.__exit__ method will call executor.shutdown(wait=True), which will
    # block until all threads are done.
    with futures.ThreadPoolExecutor(workers) as executor:
        # The map method is similar to the map built-in, except that download_one
        # is called concurrently from multiple threads; it returns a generator that
        # can be iterated over to retrieve the value returned by each call.
        res = executor.map(download_one, sorted(cc_list))

    return len(list(res))


if __name__ == '__main__':
    main(download_many)
```